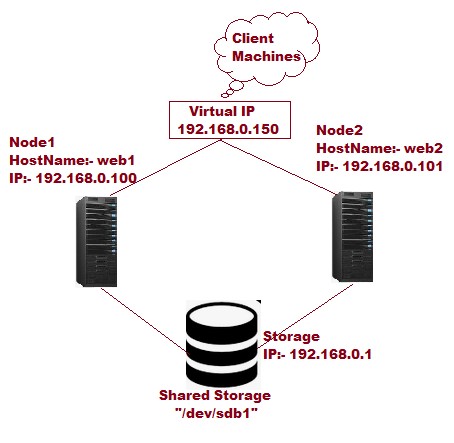

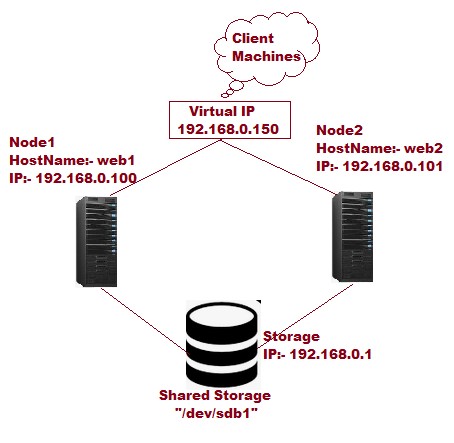

Configure High-Availability Cluster on CentOS 7 / RHEL 7

Lab setup:-

Node1:- web1

IP:- 192.168.0.100

Hostname:- web1.feenixdv.com web1

Node2:- web2

IP:- 192.168.0.101

Hostname:- web2.feenixdv.com web2

Cluster Virtual IP:- 192.168.0.150

iSCSI(LUN):- /dev/sdb1

Node basic setup:-

Setup hostname

Check firewall and SELinux (in my lab both are enabled)

Yum setup

Set the hostname on both nodes (use web2 on the second node)

[root@localhost ~]# hostnamectl set-hostname web1

Add an entry for each node to /etc/hosts on both nodes, then check that the nodes can ping each other by hostname.

[root@web1 ~]# cat /etc/hosts

192.168.0.100 web1.feenixdv.com web1

192.168.0.101 web2.feenixdv.com web2

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

[root@web1 ~]# ping web1

PING web1.feenixdv.com (192.168.0.100) 56(84) bytes of data.

64 bytes from web1.feenixdv.com (192.168.0.100): icmp_seq=1 ttl=64 time=0.038 ms

64 bytes from web1.feenixdv.com (192.168.0.100): icmp_seq=2 ttl=64 time=0.042 ms

^C

--- web1.feenixdv.com ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 999ms

rtt min/avg/max/mdev = 0.038/0.040/0.042/0.002 ms
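To check the /etc/hosts entries without reading the file by eye, a small helper like the one below can list any node name that has no entry. This is only a sketch: missing_hosts is a made-up function name, and it assumes the usual hosts format of one address followed by one or more names.

```shell
# Sketch: print every given hostname that has no entry in a hosts file.
# missing_hosts is a hypothetical helper, not part of any package.
# Usage: missing_hosts /etc/hosts web1 web2
missing_hosts() {
    hosts_file=$1
    shift
    for name in "$@"; do
        # Strip comments, skip the address field, look for an exact name match.
        if ! awk -v n="$name" '
                { sub(/#.*/, "") }
                { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                END { exit !found }' "$hosts_file"; then
            echo "$name"
        fi
    done
}
```

If the function prints nothing, every name passed to it was found. Run it on both nodes, since each node has its own /etc/hosts.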

The cluster setup needs some packages that ship on the RHEL 7 CD-ROM or ISO outside the main Packages folder. If the CD-ROM is mounted on “/mnt”, they are in “/mnt/addons/HighAvailability/”.

Copy all the packages (Packages and HighAvailability) to one node and configure YUM over FTP so that both nodes can install them.

Install “iscsi-initiator-utils” on both nodes so that each node can access the shared storage “/dev/sdb1”.

[root@web1 ~]# yum install iscsi-initiator-utils -y

Loaded plugins: langpacks, product-id, search-disabled-repos, subscription-manager

This system is not registered to Red Hat Subscription Management. You can use subscription-manager to register.

Resolving Dependencies

--> Running transaction check

---> Package iscsi-initiator-utils.x86_64 0:6.2.0.873-35.el7 will be installed

--> Processing Dependency: iscsi-initiator-utils-iscsiuio >= 6.2.0.873-35.el7 for package: iscsi-initiator-utils-6.2.0.873-35.el7.x86_64

--> Running transaction check

---> Package iscsi-initiator-utils-iscsiuio.x86_64 0:6.2.0.873-35.el7 will be installed

--> Finished Dependency Resolution

Dependencies Resolved

============================================================================================================================================================================================

Package Arch Version Repository Size

.

.

.

Complete!

[root@web1 ~]#

Now discover the storage offered by the storage server.

[root@web1 ~]# iscsiadm -m discovery -t st -p 192.168.0.1

192.168.0.1:3260,1 iqn.2018-12.com.feenix:disk1
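The discovery output has one line per target, in the form portal,tpgt followed by the target IQN. If you want just the IQNs, for example to reuse them in a later login command, a filter along these lines would do. It is a sketch; list_target_iqns is a hypothetical name and it assumes the two-column output shown above.

```shell
# Sketch: pull the target IQNs out of sendtargets discovery output.
# Each input line looks like "<portal>,<tpgt> <target-iqn>".
# list_target_iqns is a hypothetical helper that reads that output on stdin.
list_target_iqns() {
    awk 'NF >= 2 { print $2 }' | sort -u
}

# Example (needs a reachable portal, so it is left commented out):
# iscsiadm -m discovery -t st -p 192.168.0.1 | list_target_iqns
```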

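We found one target. Set the initiator name in “initiatorname.iscsi”. In this lab it is set to the same IQN string as the target; use whatever initiator name your storage server authorizes.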

[root@web1 ~]# vim /etc/iscsi/initiatorname.iscsi

[root@web1 ~]# cat /etc/iscsi/initiatorname.iscsi

InitiatorName=iqn.2018-12.com.feenix:disk1

[root@web1 ~]# systemctl enable iscsi

[root@web1 ~]# systemctl restart iscsi

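Log in to the target storage. The first attempt may fail with an authorization error, as shown here, most likely because the running iSCSI daemon still uses the old initiator name. In that case reboot the node and check again after the reboot.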

[root@web1 ~]# iscsiadm -m node -T iqn.2018-12.com.feenix:disk1 -p 192.168.0.1 -l

Logging in to [iface: default, target: iqn.2018-12.com.feenix:disk1, portal: 192.168.0.1,3260] (multiple)

iscsiadm: Could not login to [iface: default, target: iqn.2018-12.com.feenix:disk1, portal: 192.168.0.1,3260].

iscsiadm: initiator reported error (24 - iSCSI login failed due to authorization failure)

iscsiadm: Could not log into all portals

[root@web1 ~]# reboot

[root@web1 ~]# fdisk -l

Disk /dev/sda: 21.5 GB, 21474836480 bytes, 41943040 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk label type: dos

Disk identifier: 0x00019b5f

Device Boot Start End Blocks Id System

/dev/sda1 * 2048 616447 307200 83 Linux

/dev/sda2 616448 4810751 2097152 82 Linux swap / Solaris

/dev/sda3 4810752 41943039 18566144 83 Linux

Disk /dev/sdb: 2147 MB, 2147483648 bytes, 4194304 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 4194304 bytes

Disk label type: dos

Disk identifier: 0xd328a924

Device Boot Start End Blocks Id System

/dev/sdb1 8192 4194303 2093056 83 Linux

Here you can see that “/dev/sdb1” is accessible from the node. Run the same check on the other node as well.

The service we are going to make highly available is the Apache web server, so first install Apache on both nodes.

[root@web1 ~]# yum install httpd -y

Update “httpd.conf” with the entry below so that the cluster can monitor Apache. Put it at the bottom of the file.

[root@web1 ~]# vim /etc/httpd/conf/httpd.conf

.

.

<Location /server-status>

SetHandler server-status

Order deny,allow

Deny from all

Allow from 127.0.0.1

</Location>
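Since the same block has to go into httpd.conf on both nodes, it can help to script the edit so that running it twice does not add the block twice. The following is a minimal sketch under that assumption; add_server_status is a hypothetical helper name and the default path is the one used in this article.

```shell
# Sketch: append the status handler to an Apache config file only if it is
# not already there. add_server_status is a hypothetical helper.
add_server_status() {
    conf=${1:-/etc/httpd/conf/httpd.conf}
    if grep -q '<Location /server-status>' "$conf"; then
        return 0    # block already present, nothing to do
    fi
    cat >> "$conf" <<'EOF'

<Location /server-status>
    SetHandler server-status
    Order deny,allow
    Deny from all
    Allow from 127.0.0.1
</Location>
EOF
}

# Example (as root, on each node): add_server_status
```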

Now mount the storage on “/var/www/” and create an example page. Because SELinux is enabled, restore the default SELinux context on the “html” folder and “index.html”, then unmount the storage so that the cluster can manage the mount later.

After that, allow HTTP in the firewall and reload the configuration.

[root@web1 ~]# mount /dev/sdb1 /var/www/

[root@web1 ~]# mkdir /var/www/html

[root@web1 ~]# vim /var/www/html/index.html

[root@web1 ~]# getenforce

Enforcing

[root@web1 ~]# restorecon -R /var/www/

[root@web1 ~]# umount /var/www/

[root@web1 ~]# firewall-cmd --permanent --add-service=http

success

[root@web1 ~]# firewall-cmd --reload

success

Now configure the HA cluster. First install the required packages on both nodes.

Also allow the high-availability service in the firewall.

[root@web1 ~]# yum install pcs fence-agents-all -y

[root@web1 ~]# firewall-cmd --permanent --add-service=high-availability

success

[root@web1 ~]# firewall-cmd --reload

success
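To confirm that a service really was added, the list printed by firewall-cmd --list-services can be checked with a small filter. This is a sketch; has_service is a hypothetical helper name, and it assumes the space-separated, single-line output of that command.

```shell
# Sketch: succeed if the named service appears in a space-separated list
# read from stdin. has_service is a hypothetical helper.
has_service() {
    tr -s ' ' '\n' | grep -qx -- "$1"
}

# Examples (need firewalld, so they are left commented out):
# firewall-cmd --list-services | has_service http && echo "http allowed"
# firewall-cmd --list-services | has_service high-availability && echo "HA allowed"
```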

(On both nodes) To verify the installation, check whether the “hacluster” user exists.

If it does, the installation is OK. Otherwise re-check all the steps.

Now set a password for the hacluster user. Make sure the password is the same on both nodes.

[root@web1 ~]# cat /etc/passwd |grep hacluster

hacluster:x:189:189:cluster user:/home/hacluster:/sbin/nologin

[root@web1 ~]# passwd hacluster

Changing password for user hacluster.

New password:

BAD PASSWORD: The password is shorter than 8 characters

Retype new password:

passwd: all authentication tokens updated successfully.
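The user check can also be written as a small test that is easy to run on each node. This is just a sketch; user_exists is a made-up helper name, and it relies only on the standard id command.

```shell
# Sketch: succeed if the given local account exists.
# user_exists is a hypothetical helper built on the standard "id" command.
user_exists() {
    id -u "$1" >/dev/null 2>&1
}

# Example:
# user_exists hacluster || echo "hacluster missing: re-check the pcs installation"
```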

Start the “pcsd” service and enable it on both nodes.

[root@web1 ~]# systemctl start pcsd

[root@web1 ~]# systemctl enable pcsd

Created symlink from /etc/systemd/system/multi-user.target.wants/pcsd.service to /usr/lib/systemd/system/pcsd.service.

Authenticate all the cluster nodes. In my lab setup there are only two nodes (web1 and web2).

[root@web1 ~]# pcs cluster auth web1 web2

Username: hacluster

Password:

web2: Authorized

web1: Authorized

Set up the cluster. Run this command on one node only; it configures both nodes.

[root@web1 ~]# pcs cluster setup --start --name feenixdv_web web1 web2

Destroying cluster on nodes: web1, web2...

web1: Stopping Cluster (pacemaker)...

web2: Stopping Cluster (pacemaker)...

web1: Successfully destroyed cluster

web2: Successfully destroyed cluster

Sending cluster config files to the nodes...

web1: Succeeded

web2: Succeeded

Starting cluster on nodes: web1, web2...

web2: Starting Cluster...

web1: Starting Cluster...

Synchronizing pcsd certificates on nodes web1, web2...

web2: Success

web1: Success

Restarting pcsd on the nodes in order to reload the certificates...

web2: Success

web1: Success

[root@web1 ~]# pcs cluster enable --all

web1: Cluster Enabled

web2: Cluster Enabled

Check the status of the cluster.

[root@web1 ~]# pcs cluster status

Cluster Status:

Stack: corosync

Current DC: web2 (version 1.1.15-11.el7-e174ec8) - partition with quorum

Last updated: Thu Dec 27 06:48:47 2018 Last change: Thu Dec 27 06:47:46 2018 by hacluster via crmd on web2

2 nodes and 0 resources configured

PCSD Status:

web1: Online

web2: Online

Check pacemaker status.

[root@web1 ~]# pcs status

Cluster name: feenixdv_web

WARNING: no stonith devices and stonith-enabled is not false

Stack: corosync

Current DC: web2 (version 1.1.15-11.el7-e174ec8) - partition with quorum

Last updated: Thu Dec 27 06:49:03 2018 Last change: Thu Dec 27 06:47:46 2018 by hacluster via crmd on web2

2 nodes and 0 resources configured

Online: [ web1 web2 ]

No resources

Daemon Status:

corosync: active/enabled

pacemaker: active/enabled

pcsd: active/enabled
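If you want to check from a script that both nodes joined the cluster, the “Online: [ ... ]” line of the status output can be parsed. The helper below is a sketch that assumes the output format shown above (pacemaker 1.1 on CentOS 7); online_nodes is a hypothetical name.

```shell
# Sketch: print the node names from the "Online: [ ... ]" line of
# "pcs status" output read on stdin, one name per line.
# online_nodes is a hypothetical helper.
online_nodes() {
    awk '$1 == "Online:" && $2 == "[" { for (i = 3; i < NF; i++) print $i }'
}

# Example (needs a running cluster, so it is left commented out):
# [ "$(pcs status | online_nodes | wc -l)" -eq 2 ] && echo "both nodes online"
```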

Now create three resources: a filesystem resource for the shared storage (/dev/sdb1), a resource for the cluster VIP, and an apache resource that points to the Apache config file and status URL.

All three resources are placed in one resource group named apache (--group apache), so they run together on the same node and start in the order in which they were added.

[root@web1 ~]# pcs resource create HTTP_Mount_Point Filesystem device="/dev/sdb1" directory="/var/www/" fstype="ext4" --group apache

[root@web1 ~]# pcs resource create HTTP_VIP IPaddr2 ip=192.168.0.150 cidr_netmask=24 --group apache

[root@web1 ~]# pcs resource create HTTP_URL apache configfile="/etc/httpd/conf/httpd.conf" statusurl="http://127.0.0.1/server-status" --group apache

Because the node level fencing configuration depends heavily on your environment, we will disable it for this tutorial.

[root@web1 ~]# pcs property set stonith-enabled=false

Note: If you plan to use Pacemaker in a production environment, you should plan a STONITH implementation depending on your environment and keep it enabled.

Now you can see all resources are added and started.

[root@web1 ~]# pcs status

Cluster name: feenixdv_web

Stack: corosync

Current DC: web2 (version 1.1.15-11.el7-e174ec8) - partition with quorum

Last updated: Thu Dec 27 07:04:10 2018 Last change: Thu Dec 27 07:03:58 2018 by root via cibadmin on web1

2 nodes and 3 resources configured

Online: [ web1 web2 ]

Full list of resources:

Resource Group: apache

HTTP_Mount_Point (ocf::heartbeat:Filesystem): Started web1

HTTP_VIP (ocf::heartbeat:IPaddr2): Started web1

HTTP_URL (ocf::heartbeat:apache): Started web1

Daemon Status:

corosync: active/enabled

pacemaker: active/enabled

pcsd: active/enabled

[root@web1 ~]#
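To see at a glance which node each resource is running on, for example before and after a failover, the resource lines of the status output can be reduced to name and node pairs. This is a sketch that assumes the line format shown above; resource_placement is a hypothetical helper name.

```shell
# Sketch: read "pcs status" output on stdin and print "<resource> <node>"
# for every started OCF resource. resource_placement is a hypothetical helper.
resource_placement() {
    awk '$2 ~ /^\(ocf::/ && $3 == "Started" { print $1, $4 }'
}

# Example (needs a running cluster, so it is left commented out):
# pcs status | resource_placement
```

Because the three resources are in one group, all of them should report the same node.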